I recently got bitten by the Node.js bug. Hard.

If you are even remotely technically inclined, it is virtually impossible to not be seduced by the elegance, speed and low barrier to entry of getting started with Node.js. After all, its just another JavaScript API, only this time designed for running programs on the server. This in and of itself, is intriguing, but there has to be more, much more, to compel legions of open source developers to write entire libraries for Node almost over night, not to mention industry giants like Wal-Mart and Microsoft embracing this very new, fledgling technology that has yet to be proven over any significant period of time.

As intriguing as Node is, I have a hard time accepting things at face value that I don't fully understand. When I don’t understand something, I get very frustrated. This frustration usually motivates me to relentlessly attack the subject with varying degrees of success (and sometimes even more frustration!).

As such, this post is an attempt to collect my findings on how Node, and it’s alleged single-threaded approach to event-driven programming is different from what I know today. This is not a dissertation on event vs. thread-based concurrency or a detailed comparison of managed frameworks and Node.js. Instead, this is a naive attempt to capture my piecemeal understanding of how Node is different into a single post in hopes that comments and feedback will increase its accuracy in serving as a reference that helps to put in perspective for .NET developers how Node is different and what the hubbub is really all about.

To that end, I sincerely would appreciate your comments and feedback to enrich the quality of this post as I found resources on the web that tackle this specific topic severely lacking (or I am just too dense to figure it all out).

I’m going to skip the hello world code samples, or showing you how easy it is to spin up an HTTP or TCP server capable of handling a ridiculous amount of concurrent requests on a laptop because this is all well documented elsewhere. If you haven’t ever looked at Node before, check out the following resources which will help to kick-start your node.js addiction (the first hit is free):

Aside from the syntactic sugar that is immediately familiar to legions of web developers regardless of platform or language religion, you can’t come across any literature or talk on Node.js without a mention of the fact that what makes it all so wonderful is the fact that it is event-driven and single-threaded.

It is evident to me that there are either a lot of people that are much smarter than me and just instantly get that statement, as well as people that blindly accept it. Then there are those who haven’t slept well for the last week trying to figure out what the hell that means and how it’s different.

Event-driven. So what?

I’ve written lots of asynchronous code and designed many messaging solutions on event-driven architectures. As a Microsoft guy, I’m pretty familiar with writing imperative code using Begin/End, AsyncCallbacks, WaitHandles, IHttpAyncHandlers, etc. I’ve opted for ever permutation of WCF instancing and concurrency depending on the scenario at hand, and written some pretty slick WF 4 workflows that parallelize activities (yes, I know, its not really truly parallel, but in most cases, close enough). My point is, I get async and eventing, so what’s the big deal and how is Node any different than this, or the nice new async language features coming in C# 5?

IIS and .NET as a Learning Model

When learning something new, it is a tremendous advantage to know nothing about the topic and start fresh. Absent of that, it is usually helpful to have a familiar model that you can reference that helps you to compare and contrast a new concept, progressively iterating to understand the similarities and differences. As a career-long enterprise guy, working almost exclusively on the Microsoft platform, my model is IIS/WAS and .NET., so let’s summarize how a modern web server like IIS 7+ works which will provide the necessary infrastructure to help frame how .NET’s (or Java) execution model works.

As described on Learn.IIS.net, the following list describes the request-processing flow that is shown to your right:

- When a client browser initiates an HTTP request for a resource on the Web server, HTTP.sys intercepts the request.

- HTTP.sys contacts WAS to obtain information from the configuration store.

- WAS requests configuration information from the configuration store, applicationHost.config.

- The WWW Service receives configuration information, such as application pool and site configuration.

- The WWW Service uses the configuration information to configure HTTP.sys.

- WAS starts a worker process for the application pool to which the request was made.

- The worker process processes the request and returns a response to HTTP.sys.

- The client receives a response.

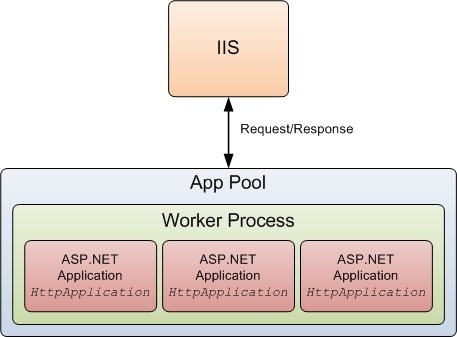

Step 7 is where your application (which might be an ASP.NET MVC app, a WCF or WF service) comes in. When WAS starts a worker process (w3wp.exe) the worker process allocates a thread for loading and executing your application. This is analogous to starting a program and inspecting the process in Windows Task Manager. If you open 3 instances of the program, you will see 3 processes in task manager and each process will have a minimum of one thread. If an unhandled exception occurs in the program, the entire process is torn down, but it doesn’t affect other processes because they are isolated for each other.

Processes, however, are not free. Starting and maintaining a process requires CPU time and memory, both of which are finite, and more processes and threads require more resources.

.NET improves resource consumption and adds an additional degree of isolation through Application Domains or AppDomains. AppDomains live within a process and your application runs inside an AppDomain.

K. Scott Allen summarizes the relationship between the worker process and AppDomains quite nicely:

You’ve created two ASP.NET applications on the same server, and have not done any special configuration. What is happening?

A single ASP.NET worker process will host both of the ASP.NET applications. On Windows XP and Windows 2000 this process is named aspnet_wp.exe, and the process runs under the security context of the local ASPNET account. On Windows 2003 the worker process has the name w3wp.exe and runs under the NETWORK SERVICE account by default.

An object lives in one AppDomain. Each ASP.NET application will have it’s own set of global variables: Cache, Application, and Session objects are not shared. Even though the code for both of the applications resides inside the same process, the unit of isolation is the .NET AppDomain. If there are classes with shared or static members, and those classes exist in both applications, each AppDomain will have it’s own copy of the static fields – the data is not shared. The code and data for each application is safely isolated and inside of a boundary provided by the AppDomain

In order to communicate or pass objects between AppDomains, you’ll need to look at techniques in .NET for communication across boundaries, such as .NET remoting or web services.

Here is a logical view borrowed from a post by Shiv Kumar that helps to show this relationship visually:

As outlined in steps 1 – 7 above, when a request comes in, IIS uses an I/O thread to dispatch the request off to ASP.NET. ASP.NET immediately sends the request to the CLR thread pool which returns immediately with a pending status. This frees the IIS I/O thread to handle the next request and so on. Now, if there is a thread available in the thread pool, life is good, and the work item is executed, returning back to the I/O thread in step 7. However, if all of the threads in the thread pool are busy, the request will queue until a CLR thread pool thread is available to process the work item, and if the queue length is too high, users will receive a somewhat terse request to go away and come back later.

This is a bit of an extreme trivialization of what happens. There are a number of knobs that can be set in IIS and ASP.NET that allow you to tune the number of concurrent requests, threads and connections that affect both IIS and the CLR thread pool. For example, the IIS thread pool has a maximum thread count of 256. ASP.NET initializes its thread pool to 100 threads per processor/core. You can adjust things like the maximum number of concurrent requests per CPU (which defaults to 5000) and maximum number of connections of 12 per CPU but this can be increased. This stuff is not easy to grasp, and system administrators dedicate entire careers to getting this right. In fact, it is so hard, that we are slowly moving away from having to manage this ourselves and just paying some really smart engineers to do it for us, at massive scale.

That said, if you are curious or want to read more, Thomas Marquardt and Fritz Onion cover IIS and CLR threading superbly in their respective posts.

Back to the example. If all of your code in step 7 (your app) is synchronous, then the number of concurrently executing requests is equal to the number of threads available to concurrently execute your requests and if you exceed this, your application/service will simply grind to a halt.

This is why asynchronous programming is so important. If your application/service (that runs in step 7) is well designed, and makes efficient use of IO by leveraging async so that work is distributed across multiple threads, then there are naturally more threads always ready to do work because the the total processing time of a request becomes that of the longest running operation instead of the sum of all operations. Do this right, and your app can scale pretty darn well as long as there are threads available to do the work.

However, as I mentioned, these threads are not free and in addition, there is a cost in switching from the kernel thread to the IIS thread and the CLR thread which results in additional CPU cycles, memory and, latency.

It is evident that to minimize latency, you apps must perform the work they need to as quickly as possible to keep threads free and ready to do more work. Leveraging asynchrony is key.

Even in era where scaling horizontally is simply a matter of pushing a button and swiping a credit card, an app that scales poorly on premise will scale poorly in the cloud, and both are expensive. Moreover, having written a lot of code, and worked with and mentored many teams in my career, I’ll admit that writing asynchronous code is just plain hard. I’ll even risk my nerd points in admitting that given the choice, I’ll write synchronous code all day long if I can get away with it.

So, what’s so different about Node?

My biggest source of confusion in digging into Node is the assertion that it is event-based and single threaded.

As we’ve just covered in our learning model, IIS and .NET are quite capable of async, but it certainly can’t do that on a single thread.

Well, guess what? Neither can Node. Wait, there’s more.

If you think about it for just a few seconds, highly concurrent, event-driven and single threaded do not mix. Intentional or otherwise, “single threaded” is a red herring and something that has simply been propagated over and over. Like a game of telephone, it’s meaning has been distorted and this is the main thing that was driving me NUTS.

Let’s take a closer look.

In Node, an program/application is created in a process, just like in the .NET world. If you run Node on Windows, you can see an instance of the process for each .js program you have running in Task Manager.

What makes Node unique is *not* that it is single-threaded, it’s the way in which it manages event-driven/async for you and this where the concept of an Event Loop comes in.

To provide an example, to open a WebSocket server that is compliant with the latest IETF and W3C standards, you write code like this written by my friend Adam Mokan:

1: var ws = require('websocket.io')

2: , server = ws.listen(3000)

3:

4: server.on('connection', function (socket) {

5:

6: console.log('connected to client');

7: socket.send('You connected to a web socket!');

8:

9: socket.on('message', function (data) {

10: console.log('nessage received:', data);

11: });

12:

13: socket.on('close', function () {

14: console.log('socket closed!');

15: });

16: });

As soon as ws.listen(3000) executes, a WebSocket server is created on a single thread which listens continuously on port 3000. When an HTML5 WebSocket compliant client connects to it, it fires the ‘connection’ event which the loop picks up and immediately publishes to the thread pool (see, I told you it was only half the story), and is ready to receive the next request. Thanks to the V8 Engine, this happens really, really fast.

What’s cool is that the program *is* the server. It doesn’t require a server like IIS or Apache to delegate requests to it. Node is fully capable of hosting an HTTP or TCP socket, and it does do this on a single thread. On the surface, this is actually quite similar to WCF which can be hosted in any CLR process or IIS, and to take this analogy one step further, you could configure a self-hosted WCF service as a singleton with multiple concurrency for a similar effect. But, as you well know, there is a ton of work that now needs to be done from a synchronization perspective and if you don’t use async to carry out the work, your performance will pretty much suck.

Wait! You say. Other than an (arguably) easier async programming model, how is this different than IIS/WAS and ASP.NET or WCF? Take a look at this drawing from from a great post by Aarron Stannard who just happens to be a Developer Evangelist at Microsoft:

As you can see, there is more than one thread involved. I don’t mean this to sound like a revelation, but it is a necessary refinement to explain how the concurrency is accomplished. But, and it’s a BIG but, unlike .NET, you don’t have a choice but to write your code asynchronously. As you can see in the code sample above, you simply can’t write synchronous code in Node. The work for each event is delegated work to an event handler by the loop immediately after the event fires . The work is picked up by a worker thread in the thread pool and then calls back to the event loop which send the request on its way. It is kind of subtle, but the single thread is *almost* never busy and when it is, it is only busy for a very short period of time.

This is different from our model in at least 3 ways I can think of:

- Your code is aysnc by default, period.

- There is no context switching as the Event Loop simply publishes and subscribes to the thread pool.

- The Event Loop never blocks.

Your code is aysnc by default, period.

This can’t be understated. Writing asynchronous code is hard, and given the option, most of us won’t. In a thread-based approach, particularly where all work isn’t guaranteed to be asynchronous, latency can become a problem. This isn’t optional in Node, so you either learn async or go away. Since it’s JavaScript, the language is pretty familiar to a very, very wide range of developers.

Node is very low level, but modules and frameworks like Express add sugar on top of it that help take some of the sting out of it and modules like Socket.IO and WebSocket.IO are pure awesome sauce.

There is no context switching as the Event Loop simply publishes and subscribes to the thread pool

I am not a threading expert, but simple physics tells me that that 0 thread hops is better than a minimum of 3.

I guess this might be analogous to a hypothetical example where HTTP.sys is the only gate, and WWW Publishing Service, WAS, ASP.NET are no longer in play, but unless HTTP.sys was changed from a threaded approach for concurrency to an Event Loop, I’m guessing it wouldn’t necessarily be apples to apples.

With Node, while there are worker threads involved, since they are each carrying out one and only one task at a time asynchronously, the CPU and memory footprint should be lower than a multi-treaded scenario, since less threads are required. This tends to be better for scalability in a highly concurrent environment, even on commodity hardware.

The Event Loop never blocks.

This is still something I’m trying to get my head around, but conceptually, what appears to be fundamentally different is that the Event Loop always runs on the same, single I/O thread. It is never blocking, but instead waits for something to happen (a connection, request, message, etc.) before doing anything, and then, like a good manager, simply delegates the work to an army of workers who report back when the work is done. In other words, the worker threads do all the work, while the Event Loop just kind of chills waiting for the next request and while it is waiting, it consumes 0 resources.

One of the things that I am not clear on is that when the callbacks are serialized back to the loop, only one callback can be handled at one time, so, if a request comes in at the exact same time that the loop is temporarily busy with the callback, will that request block, even if just for the slightest instant?

So?

Obviously, still being very new to Node, I have a ton to learn, but it is clear that for highly concurrent, real-time scenarios, Node has a lot of promise.

Does this mean that I’m abandoning .NET for Node? Of course not. For one, it will take a few years to see how things pan out in the wild, but the traction that Node is getting can’t be ignored, and it could very well signal the shift to event-based server programming and EDA in the large.

I’d love to know what you think, and as I mentioned in the introduction, welcome your comments and feedback.

Resources: